Immersive Imagination: Crafting AI-Powered Spatial Experiences

Using Gen AI to build a multimodal immersive experience

Imagine stepping into a room where the space around you transforms based on your words and imagination. You can interact with the environment, explore new worlds and watch your ideas come to life. This is the vision behind our AI-driven “holodeck,” an immersive, multimodal experience designed to let visitors intuitively create their own virtual environments.

Taking its cue from the fictional holodeck of Star Trek, a team from frog, Capgemini’s Applied Innovation Exchange (AIE) and Synapse, working together at our studio in Mission Rock, San Francisco, has brought this iconic concept closer to reality by blending expertise in generative AI, immersive spaces and innovation into a single experience.

Inspired by the holodeck

Star Trek’s holodeck was presented as a virtual environment capable of creating highly realistic simulations. Characters could interact with these simulations as if they were real, immersing themselves in new worlds, historical moments or other spatial environments. The concept of a space that can respond dynamically to a user’s imagination or natural language input has inspired innovations across industries and was a reference for our AI-driven experience.

While the Star Trek holodeck remains a fictional device, there have been attempts to create collectively immersive, interactive environments in recent years.

The evolution of virtual reality over the past 10 years has allowed developers, and companies such as Nvidia, to explore holodeck-like systems built on high-resolution immersive headsets. A key aspect of the Star Trek holodeck, however, is collective immersion without a headset, which can be achieved through an immersive physical space. Spatial immersion has gained popularity over the last few years through cultural, retail and entertainment applications, culminating in the opening of the Sphere in Las Vegas.

By integrating multimodal generative AI (Gen AI) with an immersive space, we allow users not just to see and interact with virtual environments, but to shape them dynamically through natural language.

Our AI-driven holodeck is designed to introduce visitors, clients and industry leaders to the current capabilities of multimodal Gen AI in an engaging way. We took the initial prototype from idea to fully functional application in a few weeks so that we could show global corporate leaders what today’s Gen AI can do during a leadership summit in San Francisco. The system combines the latest foundation LLMs, natural multilingual speech processing and multimodal APIs to showcase some of the newest advances in AI. The goal is to immerse visitors from across industries in the capabilities and potential applications of Gen AI while inspiring new ways of thinking about the technology.

Innovation under one roof

Our vision came to life at our innovation hub at Mission Rock in San Francisco, which opened in 2024. Under one roof, we’ve united three core teams: frog, Synapse and Capgemini’s Applied Innovation Exchange (AIE). Together, these teams represent world-class design, engineering and applied innovation, and combining their expertise and capabilities is what made the AI-driven holodeck possible.

As part of the build-out of our new studio, we commissioned an Igloo Vision immersive space, an enclosed environment that delivers 360-degree visuals to support collective immersion. Beyond the holodeck, the Igloo Vision space can be adapted for practical uses, such as exploring and manipulating virtual models of physical spaces or systems alongside colleagues. We also use the space to build immersive prototypes of new convergent experiences for our innovative clients.

AI and multimodal creativity and expression

The AI landscape is evolving rapidly, opening new doors for multimodal creative expression. AI can now generate text, music, art, 3D artifacts and environments, and audio-visual content in multiple languages. This convergence of creative tools is pushing the boundaries of how we define art, communication and storytelling, and it can empower artists, designers and businesses to express and explore new ideas rapidly.

However, the implications of these advancements are complex. Issues around copyright and content ownership are still being debated, especially as AI tools generate vast amounts of “creative” output.

We are actively working on features that allow generated content to be cached and retrieved as part of the experience. This would let users revisit previously created worlds without spending the GPU cycles needed to generate new content on every use. The same question comes up in our client implementations of Gen AI, where the customer experience can guide whether a request genuinely needs a Gen AI process or whether an existing, legacy process will do.
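One way such a cache could work is sketched below in TypeScript, assuming each generated world can be stored as references (URLs) to its assets and looked up by its normalized prompt. `GeneratedWorld`, `normalizePrompt` and `WorldCache` are hypothetical names for illustration, not the holodeck’s actual code, and exact-match lookup is a simplifying assumption.

```typescript
// Hypothetical shape of one generated world: references to the assets
// produced by the external services, plus the prompt that created it.
interface GeneratedWorld {
  prompt: string;
  skyboxUrl: string;
  narrationUrl: string;
  musicUrl: string;
  createdAt: number;
}

// Hypothetical normalization so trivially different phrasings of the same
// request ("A peaceful beach at sunset." vs "a peaceful  beach at sunset")
// map to the same cache key.
function normalizePrompt(prompt: string): string {
  return prompt
    .toLowerCase()
    .replace(/[.,!?;:"']/g, "") // drop common punctuation
    .replace(/\s+/g, " ") // collapse repeated whitespace
    .trim();
}

// Hypothetical in-memory cache; a real deployment would persist this.
class WorldCache {
  private worlds = new Map<string, GeneratedWorld>();

  // Return a previously generated world for this prompt, if one exists.
  get(prompt: string): GeneratedWorld | undefined {
    return this.worlds.get(normalizePrompt(prompt));
  }

  // Store a newly generated world under its normalized prompt.
  put(world: GeneratedWorld): void {
    this.worlds.set(normalizePrompt(world.prompt), world);
  }

  // Reuse a cached world when available; otherwise call the expensive
  // generator once and cache its result.
  async getOrGenerate(
    prompt: string,
    generate: (prompt: string) => Promise<GeneratedWorld>,
  ): Promise<GeneratedWorld> {
    const cached = this.get(prompt);
    if (cached) return cached;
    const world = await generate(prompt);
    this.put(world);
    return world;
  }
}
```

Exact matching only helps when visitors repeat a request closely; matching prompts that are merely similar would need something fuzzier, such as embedding similarity.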

Building the holodeck

Digital design

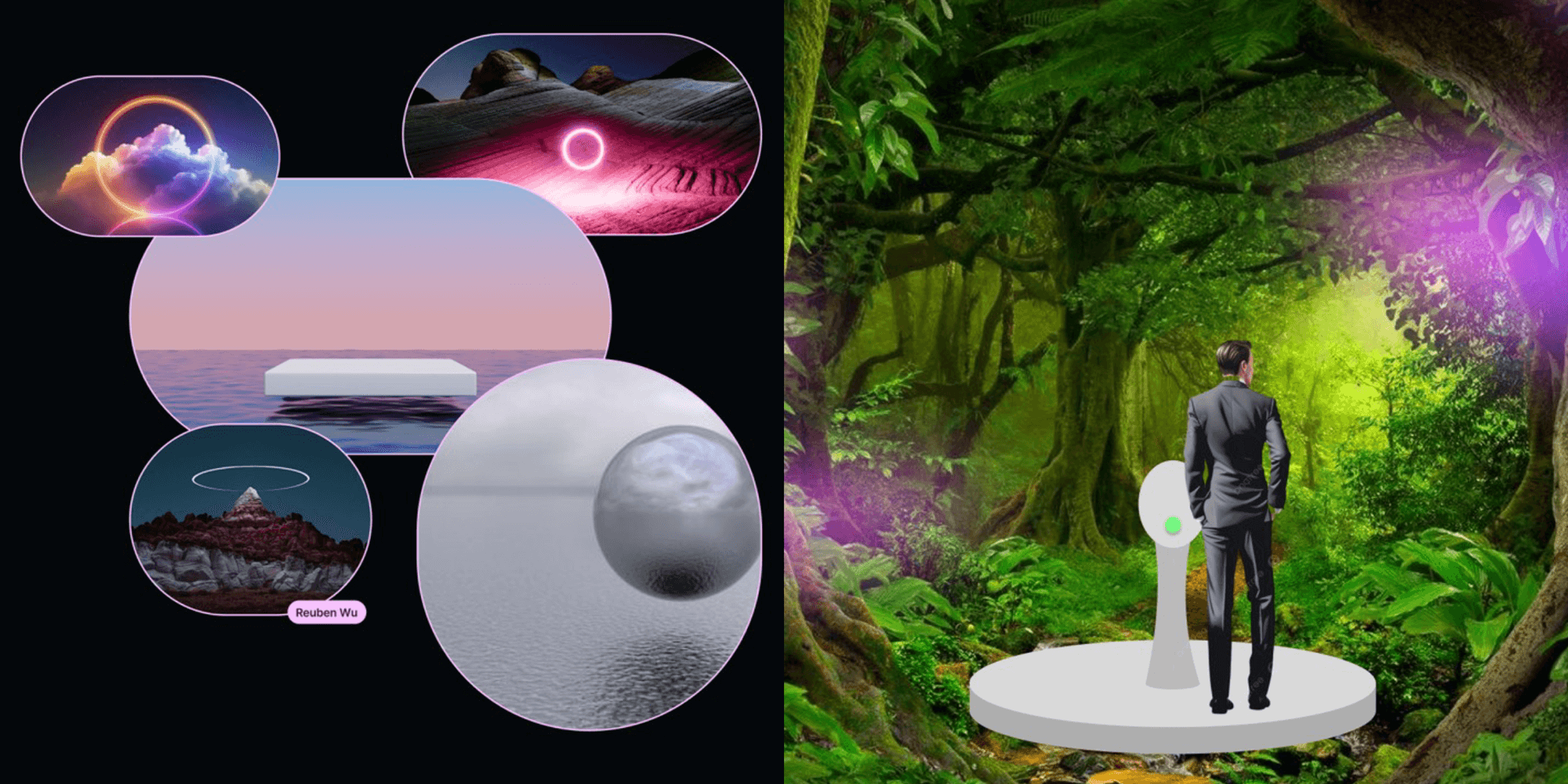

As the concepting phase progressed, the visual design went through multiple iterations. Explorations included designs and animations for how the AI entity would visually represent its speaking and listening states, how it would handle latency while generative assets were being created, and how the system would transition from the default environment to user-created environments and back again.

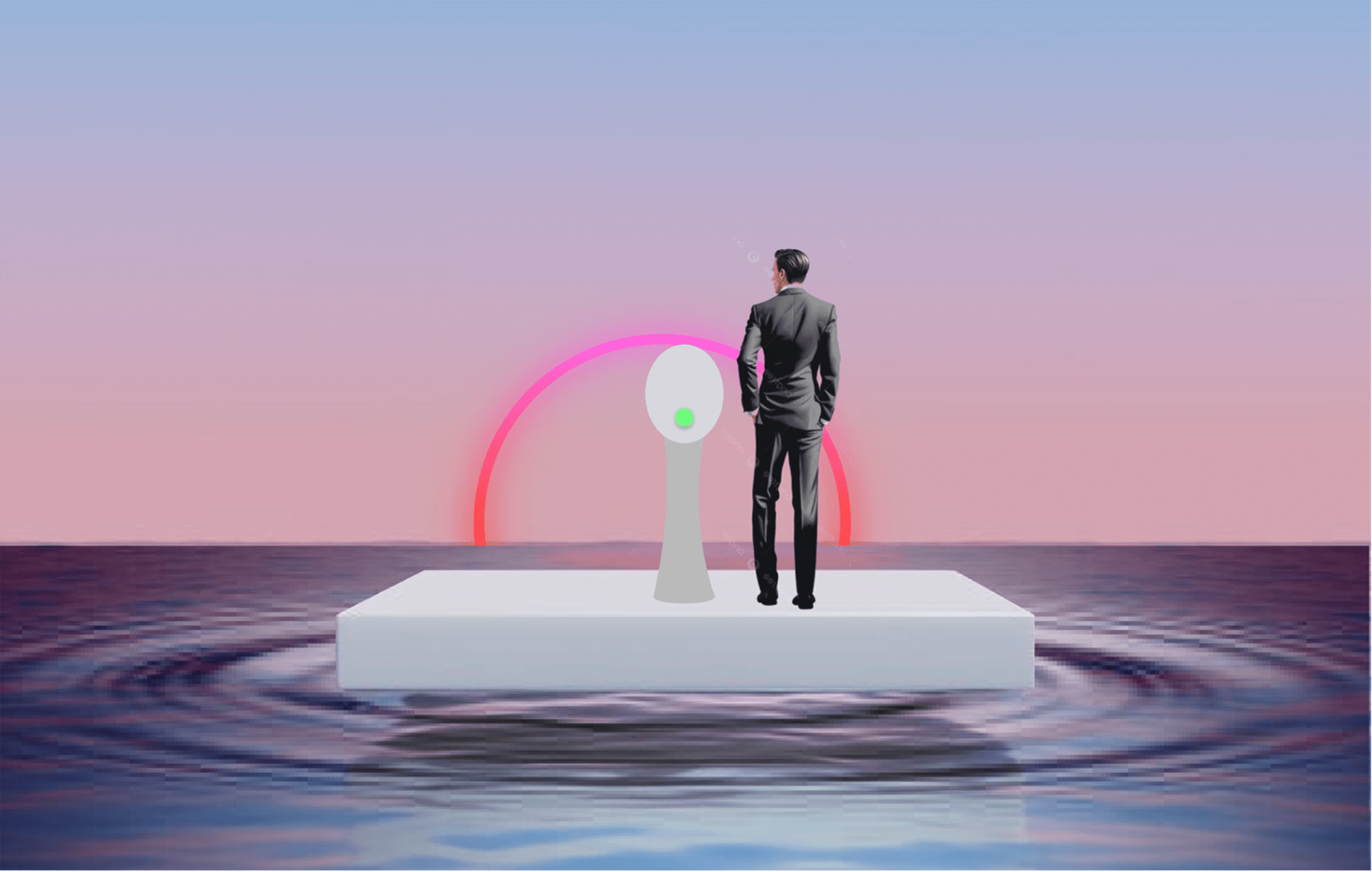

The design goal was to immerse users in a warm, imaginative environment that blends surrealist aesthetics with a tranquil, expansive ocean. Users are invited to stand on a platform suspended slightly above the calm, rippling water, which stretches infinitely into the horizon, creating an illusion of boundless space. Above the ocean hovers a bright gradient ring, reflecting in the water below and serving as the AI’s interactive interface.

Physical design

The team needed to account for the limitations of call-and-response natural language processing (NLP) at the time of initial production, as well as the need to detect the user’s presence in an intuitive way. A physical platform and button system was designed to identify and isolate a single user. The platform invites the selected user to drive the experience, and an embedded proximity sensor triggers the system to welcome them. The AI then begins to speak and prompts the user to imagine a place, holding down the glowing button while they describe it. When the button is released, the system begins generating a new environment based on what the user said.
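The press-and-hold interaction could be modeled roughly as follows. This TypeScript sketch assumes the proximity sensor and button arrive as simple events and that microphone audio arrives as byte chunks; the `PushToTalk` class and its methods are hypothetical, not the installation’s firmware or front-end code.

```typescript
// Hypothetical push-to-talk capture for the platform-and-button flow:
// audio is kept only while a user is on the platform and holding the
// button, and the utterance is handed off when the button is released.
class PushToTalk {
  private present = false;
  private recording = false;
  private chunks: Uint8Array[] = [];
  private readonly onUtterance: (audio: Uint8Array) => void;

  constructor(onUtterance: (audio: Uint8Array) => void) {
    this.onUtterance = onUtterance;
  }

  // Proximity sensor changed state. Leaving the platform cancels any capture.
  setPresence(present: boolean): void {
    this.present = present;
    if (!present) {
      this.recording = false;
      this.chunks = [];
    }
  }

  // Button pressed: start a fresh recording, but only if someone is present.
  press(): void {
    if (!this.present) return;
    this.recording = true;
    this.chunks = [];
  }

  // Microphone data arrives continuously; keep it only while recording.
  pushAudio(chunk: Uint8Array): void {
    if (this.recording) this.chunks.push(chunk);
  }

  // Button released: concatenate the captured chunks and hand them off
  // for transcription. Presses with no captured audio are ignored.
  release(): void {
    if (!this.recording) return;
    this.recording = false;
    const total = this.chunks.reduce((n, c) => n + c.length, 0);
    if (total === 0) return;
    const audio = new Uint8Array(total);
    let offset = 0;
    for (const c of this.chunks) {
      audio.set(c, offset);
      offset += c.length;
    }
    this.chunks = [];
    this.onUtterance(audio);
  }
}
```

Tying capture to both presence and the held button is what lets the system isolate one speaker in a shared room without relying on open-microphone voice detection.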

System architecture

When a user speaks to the system, their voice is captured and transcribed using OpenAI’s Whisper model. The transcribed text then flows through our GPT-4-powered content generation system, which breaks the input down into three key components: scene descriptions for visual generation, narratives for voice-over and musical direction for ambient soundtracks.
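A minimal sketch of the validation step, assuming the model is instructed to reply with a single JSON object containing one field per downstream service. The field names `scene`, `narrative` and `music`, the instruction text and `parseBreakdown` are all hypothetical; the actual prompt and schema are not described here.

```typescript
// Hypothetical shape of the breakdown: one field per downstream service.
interface SceneBreakdown {
  scene: string; // visual description for the 360-degree environment
  narrative: string; // voice-over script for speech synthesis
  music: string; // musical direction for the ambient soundtrack
}

// Illustrative instruction text; the wording is an assumption.
const BREAKDOWN_INSTRUCTIONS =
  "Turn the visitor's request into an immersive world. Reply with JSON only: " +
  '{"scene": string, "narrative": string, "music": string}.';

// Validate the model's reply before fanning out to external services, so a
// malformed response fails early instead of producing a half-built world.
function parseBreakdown(reply: string): SceneBreakdown {
  // Models sometimes wrap JSON in a Markdown code fence; strip it if present.
  const json = reply.replace(/^`{3}(?:json)?\s*|\s*`{3}$/g, "").trim();
  const data: unknown = JSON.parse(json);
  if (typeof data !== "object" || data === null) {
    throw new Error("Breakdown is not a JSON object");
  }
  const record = data as Record<string, unknown>;
  const fields = ["scene", "narrative", "music"] as const;
  for (const field of fields) {
    const value = record[field];
    if (typeof value !== "string" || value.trim() === "") {
      throw new Error(`Breakdown is missing "${field}"`);
    }
  }
  return {
    scene: (record.scene as string).trim(),
    narrative: (record.narrative as string).trim(),
    music: (record.music as string).trim(),
  };
}
```

Splitting the output into explicitly typed fields keeps each external service’s input independent, which is what allows the three generation calls to run in parallel.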

The communication layer connects our physical and digital components through WebSocket and serial interfaces. It manages data flow between the platform sensors, control buttons and our React-based front-end application. This layer ensures that every user interaction, from stepping onto the platform to pressing the control button, receives an immediate response, maintaining fluid interactivity throughout the experience.
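As an illustration of the serial side of this layer, the sketch below assumes the platform firmware writes newline-delimited `KEY:VALUE` text lines such as `PRESENCE:1` or `BUTTON:DOWN`. That protocol, `SerialLineDecoder` and `toWebSocketMessage` are hypothetical; reading the port itself is left out.

```typescript
// Events the front end cares about, as a discriminated union.
type HardwareEvent =
  | { type: "presence"; present: boolean }
  | { type: "button"; pressed: boolean };

// Parse one line from the serial port. The "KEY:VALUE" format is a
// hypothetical firmware protocol; unknown or malformed lines return null.
function parseSerialLine(line: string): HardwareEvent | null {
  const [key, value] = line.trim().split(":");
  switch (key) {
    case "PRESENCE":
      if (value === "1" || value === "0") return { type: "presence", present: value === "1" };
      return null;
    case "BUTTON":
      if (value === "DOWN" || value === "UP") return { type: "button", pressed: value === "DOWN" };
      return null;
    default:
      return null;
  }
}

// Serial data arrives in arbitrary chunks, so buffer until a newline
// completes a line, then parse each complete line.
class SerialLineDecoder {
  private buffer = "";

  push(chunk: string): HardwareEvent[] {
    this.buffer += chunk;
    const lines = this.buffer.split("\n");
    this.buffer = lines.pop() ?? ""; // keep the trailing partial line
    const events: HardwareEvent[] = [];
    for (const line of lines) {
      const event = parseSerialLine(line);
      if (event) events.push(event);
    }
    return events;
  }
}

// Events are forwarded to the React app over WebSocket as JSON text frames.
function toWebSocketMessage(event: HardwareEvent): string {
  return JSON.stringify({ source: "platform", ...event });
}
```

Buffering partial lines matters because serial reads rarely align with message boundaries; a `BUTTON:DOWN` line can easily arrive split across two reads.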

When a user describes a scene, for example “a peaceful beach at sunset,” the content is routed through multiple external AI services. The scene description is sent to Skybox for generating immersive 360-degree environments, the narrative is processed by ElevenLabs for natural voice synthesis and musical direction is handled by Suno for ambient soundtrack creation. These processes run in parallel while our React application coordinates their outputs through state management and delivers them to the Igloo display system via React Three Fiber components and our audio mixer.
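The parallel fan-out can be sketched with plain promises. The `GenerationServices` interface is a hypothetical wrapper: each function stands in for a call to Skybox, ElevenLabs or Suno and resolves to an asset URL, and the real request formats of those services are deliberately not modeled. Each call is settled independently, so one failing service does not discard the others’ results.

```typescript
// Outcome of one service call: either a value or an error message.
type Settled<T> = { ok: true; value: T } | { ok: false; error: string };

// Run a task and capture its outcome instead of letting one failure reject
// the whole batch (the same idea as Promise.allSettled).
function settle<T>(task: () => Promise<T>): Promise<Settled<T>> {
  return Promise.resolve()
    .then(task)
    .then(
      (value): Settled<T> => ({ ok: true, value }),
      (err: unknown): Settled<T> => ({ ok: false, error: String(err) }),
    );
}

// Hypothetical wrappers around the external services; each resolves to the
// URL of the generated asset.
interface GenerationServices {
  skybox: (scene: string) => Promise<string>;
  voice: (narrative: string) => Promise<string>;
  music: (direction: string) => Promise<string>;
}

interface WorldAssets {
  skybox: Settled<string>;
  voice: Settled<string>;
  music: Settled<string>;
}

// Start all three requests together and wait for every one to settle.
async function generateWorld(
  services: GenerationServices,
  input: { scene: string; narrative: string; music: string },
): Promise<WorldAssets> {
  const [skybox, voice, music] = await Promise.all([
    settle(() => services.skybox(input.scene)),
    settle(() => services.voice(input.narrative)),
    settle(() => services.music(input.music)),
  ]);
  return { skybox, voice, music };
}
```

Because the three calls start together, total wait time is roughly that of the slowest service rather than the sum of all three; the React layer can then decide how to present a world with a missing asset.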

Technical implementation insights

Our implementation tackles several interesting technical challenges. We use React Three Fiber to integrate Three.js with React’s component lifecycle, allowing us to handle complex 3D scenes declaratively.

State management was another crucial consideration. Rather than using external state management libraries, we implemented a custom reducer pattern that handles both synchronous UI states and asynchronous AI processes. This approach allows us to manage complex state transitions. For example, when a user steps onto the platform, the system progresses through multiple states (idle to listening to processing to rendering) while maintaining responsive UI feedback.
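A reducer in this style might look like the sketch below, using the four phases named above. The action names, state fields and transition rules are assumptions for illustration, not the production reducer.

```typescript
// The four phases named in the text.
type Phase = "idle" | "listening" | "processing" | "rendering";

interface HolodeckState {
  phase: Phase;
  transcript: string | null;
  worldId: string | null;
  error: string | null;
}

// Hypothetical actions: hardware/UI events and results of asynchronous AI
// work are dispatched through the same reducer.
type Action =
  | { type: "PLATFORM_ENTERED" }
  | { type: "PLATFORM_LEFT" }
  | { type: "TRANSCRIPT_READY"; text: string }
  | { type: "WORLD_READY"; worldId: string }
  | { type: "AI_FAILED"; message: string };

const initialState: HolodeckState = { phase: "idle", transcript: null, worldId: null, error: null };

// Each transition is guarded on the current phase, so out-of-order or late
// events leave the state unchanged.
function holodeckReducer(state: HolodeckState, action: Action): HolodeckState {
  switch (action.type) {
    case "PLATFORM_ENTERED":
      return state.phase === "idle" ? { ...state, phase: "listening", error: null } : state;
    case "TRANSCRIPT_READY":
      return state.phase === "listening" ? { ...state, phase: "processing", transcript: action.text } : state;
    case "WORLD_READY":
      return state.phase === "processing" ? { ...state, phase: "rendering", worldId: action.worldId } : state;
    case "AI_FAILED":
      // Return to listening so the visitor can simply try again.
      return state.phase === "idle" ? state : { ...state, phase: "listening", error: action.message };
    case "PLATFORM_LEFT":
      return initialState;
  }
}
```

In a React component this would be wired up with `useReducer(holodeckReducer, initialState)`. Guarding each transition on the current phase means a late AI result, such as `WORLD_READY` arriving after the visitor has stepped off, is simply ignored.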

The audio pipeline presented unique synchronization challenges. We developed a custom audio mixer component that handles multiple concurrent audio streams, namely voice-over narratives and ambient music, with dynamic volume adjustment based on the user’s position and the system state. The mixer uses the Web Audio API for low-latency processing and seamless transitions between audio states.
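The ducking logic could be sketched as a pure function plus a thin layer that applies it through the Web Audio API’s `AudioParam.setTargetAtTime`, which moves a parameter exponentially toward a target value. The gain levels, the 0.15-second time constant, the `proximity` input (0 when the visitor is far away, 1 when on the platform) and the function names are assumptions; `GainLike` is a structural subset of `GainNode` so the logic can be tested without a browser.

```typescript
type MixPhase = "idle" | "listening" | "processing" | "rendering";

interface MixTargets {
  music: number;
  voice: number;
}

// Hypothetical mixing policy: duck the music under the voice-over, keep the
// room quiet while the visitor speaks, and scale the music by proximity.
function mixTargets(phase: MixPhase, narrating: boolean, proximity: number): MixTargets {
  const presence = Math.min(1, Math.max(0, proximity));
  let music = phase === "rendering" ? 0.8 : 0.5;
  if (narrating) music *= 0.3; // duck under the narration
  if (phase === "listening") music *= 0.2; // leave room for the microphone
  const voice = narrating ? 1 : 0;
  return { music: music * (0.5 + 0.5 * presence), voice };
}

// Structural subset of a Web Audio GainNode: a real GainNode can be passed
// in the browser, and a stub can be used in tests.
interface GainLike {
  gain: { setTargetAtTime(target: number, startTime: number, timeConstant: number): unknown };
}

// Glide each gain toward its target instead of jumping, which avoids
// audible clicks when the state changes.
function applyMix(now: number, music: GainLike, voice: GainLike, targets: MixTargets): void {
  const timeConstant = 0.15; // seconds; assumed value
  music.gain.setTargetAtTime(targets.music, now, timeConstant);
  voice.gain.setTargetAtTime(targets.voice, now, timeConstant);
}
```

In the browser, `music` and `voice` would be `GainNode`s created with `audioContext.createGain()`, and `now` would be `audioContext.currentTime`.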

Error handling and recovery were built into every layer of the system. The WebSocket connection includes automatic reconnection logic with exponential backoff, while the AI service calls are wrapped in a retry mechanism that can gracefully degrade functionality if specific services become unavailable. All system events are logged to a Slack channel for real-time monitoring and debugging.
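A minimal sketch of the backoff and graceful-degradation pieces, assuming a fallback value exists for each service (for example, a default skybox). `backoffDelay`, `withRetry` and `slackPayload` are hypothetical names, and the 500 ms base delay and 30 s cap are illustrative. Slack incoming webhooks accept a JSON body with a `text` field, which is what `slackPayload` produces.

```typescript
// Exponential backoff: 500 ms, 1 s, 2 s, 4 s, ... capped at maxMs.
// Base and cap are assumed values.
function backoffDelay(attempt: number, baseMs = 500, maxMs = 30000): number {
  return Math.min(maxMs, baseMs * Math.pow(2, attempt));
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Retry an AI service call with backoff; if every attempt fails, return a
// fallback so the experience degrades (e.g. a default skybox or no
// soundtrack) instead of stalling.
async function withRetry<T>(
  call: () => Promise<T>,
  fallback: T,
  log: (message: string) => void,
  maxAttempts = 3,
  baseMs = 500,
): Promise<T> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await call();
    } catch (err) {
      log(`attempt ${attempt + 1}/${maxAttempts} failed: ${String(err)}`);
      if (attempt < maxAttempts - 1) await sleep(backoffDelay(attempt, baseMs));
    }
  }
  log("all attempts failed; using fallback");
  return fallback;
}

// Format an event for a Slack incoming webhook ({"text": ...} JSON body).
// The webhook URL itself is deployment-specific.
function slackPayload(event: string, detail: string): string {
  return JSON.stringify({ text: `[holodeck] ${event}: ${detail}` });
}
```

The same `backoffDelay` could schedule WebSocket reconnection: in the socket’s `close` handler, wait `backoffDelay(attempt)` milliseconds before opening a new connection, and reset `attempt` to 0 once a connection succeeds. Log messages could be posted with `fetch(webhookUrl, { method: "POST", headers: { "Content-Type": "application/json" }, body: slackPayload(event, detail) })`.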

Looking ahead to the future

The holodeck is an example of the kind of forward-looking experience a talented team can build on a short timeline when empowered by AI. It has delighted visitors at events and studio workshops. In recent months, we’ve hosted executives and design team members from a wide range of Fortune 500 companies, an after-party for Figma’s San Francisco-based Config conference, the leading Llama Lounge AI startup community, San Francisco Design Week participants and guests from the San Francisco Creative Mornings design community. Many visitors have asked how they could install something similar at their own companies. Please reach out if you have questions.

As successful as the experience has been, we are already pushing the next version of the holodeck in several directions. One is letting users browse and retrieve saved or archived worlds and sessions using natural language.

We are investigating ways to generate individual 3D-modeled objects, as well as more immersive and diverse sound effects. AI interaction is also moving toward more real-time conversation paradigms with the introduction of advanced voice modes and real-time voice APIs from OpenAI and Google. We have been working with numerous clients on adding real-time voice interaction to user experiences and see it as an emerging user expectation in 2025 and beyond. AI-generated video and animation are also advancing rapidly with the introduction of Google’s Veo 3 and the evolution of Runway; this is another content type we are tracking closely for immersive applications as generation latency falls. We are also actively developing agentic decision making and workflows that help users interact with the physical world and their environments.

As we look to the future, our AI-driven holodeck may evolve beyond entertainment or creative applications. It could become a powerful tool for industries like engineering, architecture and healthcare. Imagine using this technology for real-time simulations of engineering projects or virtual walkthroughs of architectural designs before they’re built. With digital twin technology, entire cities or ecosystems could be modeled and explored interactively, allowing leaders to make more informed, data-driven decisions.

This AI-powered holodeck is not just a glimpse into what technology can do today; it’s a vision of how we will interact with our world in the future.

Project Leads – Josh Baillon, Charles Yust, Ryan Starling, Antonello Crimi

Digital Design – Will Schroeder, Antonello Crimi

Physical Design/Build and Firmware – Adam Kingman, Julian Mickelson, Ryan Starling

Graphics Software and AI Development – Kaz Saegusa, Anastasia Zimina, Charles Yust

Charles is an Executive Design Technology Director and emerging technology lead focused on the architecture, design and development of state-of-the-art, human-centered solutions for innovative companies and influential cultural institutions. He has designed and built digital experiences at various scales, from installations in spatial environments to mobile, online and mixed reality platforms. He leads Gen AI initiatives and strategy and has worked with leading companies and retailers to understand how AI can be leveraged to create new experiences and processes or enhance existing ones. Before joining frog, he received an MFA with honors in Design and Technology from Parsons and worked for two award-winning NYC design firms, building digital experiences for leading institutions.

Kaz is a passionate hybrid of designer and technologist with over 10 years of experience bridging design and technology. His passion is turning emerging technologies and trends into visionary concepts and developing them into innovative experiences. At frog, Kaz has engaged in a wide range of initiatives, from visioning, concepting and research to experience design, development and product delivery, in industries such as entertainment, telco, media and retail. Before moving to the U.S., Kaz was based in Tokyo for 25 years, where he received his Master of Engineering in Intermedia Art and Science from Waseda University.

Anastasia is a forward-thinking solutions architect and technologist with diverse experience in the tech industry. She is known for her ability to forge meaningful, lasting relationships with clients. Anastasia’s methodical problem-solving skills enable her to delve deep into challenges, ensuring comprehensive solutions that address core issues. Her career has focused on the intersection of software development and design, with the aim of maximizing the impact of the products she helps bring to life. Anastasia has deep expertise in rapid prototyping, Unity development and generative AI.

Josh Baillon is a Director at Capgemini’s Applied Innovation Exchange (AIE) in San Francisco, where he works closely with VCs, startups, and thought leaders to develop breakthrough concepts in agentic AI, immersive experiences, blockchain, robotics, and quantum computing. Previously, he co-founded Nestlé’s Silicon Valley Innovation Outpost, building digitally-enabled business models and personalized nutrition solutions. A curious problem-solver passionate about innovation, Josh believes the best way to predict the future is to create it. He began his career in management consulting and founded an animation startup along the way. In his free time, he enjoys flying as a private pilot.
