Harnessing Gen AI at the Edge

The edge is poised to be the next frontier for Gen AI.

Generative AI (Gen AI) use and expectations have skyrocketed over the past year. In large part, this is due to the foundational, disruptive change it is driving for companies, products and customers across industries. In fact, the market is expected to grow at a compound annual growth rate (CAGR) of 42% over the next 10 years, reaching USD 1.3T.

To date, Gen AI has primarily been focused on use cases that leverage Large Language Models (LLMs) to generate useful text, images, audio and now video content based on prompts for mass-market applications on the web. The shift from searching for information based on a query to generating useful content in response to a prompt is a paradigm that will remain a significant area of opportunity and disruption for years to come.

Predicting the next steps of this market is a bit like coming up with use cases for electricity after only seeing the first lightbulb illuminate. That said, one trend that is becoming clearer is that the edge is poised to be the next frontier for Gen AI.

Why is the edge the next frontier for Gen AI?

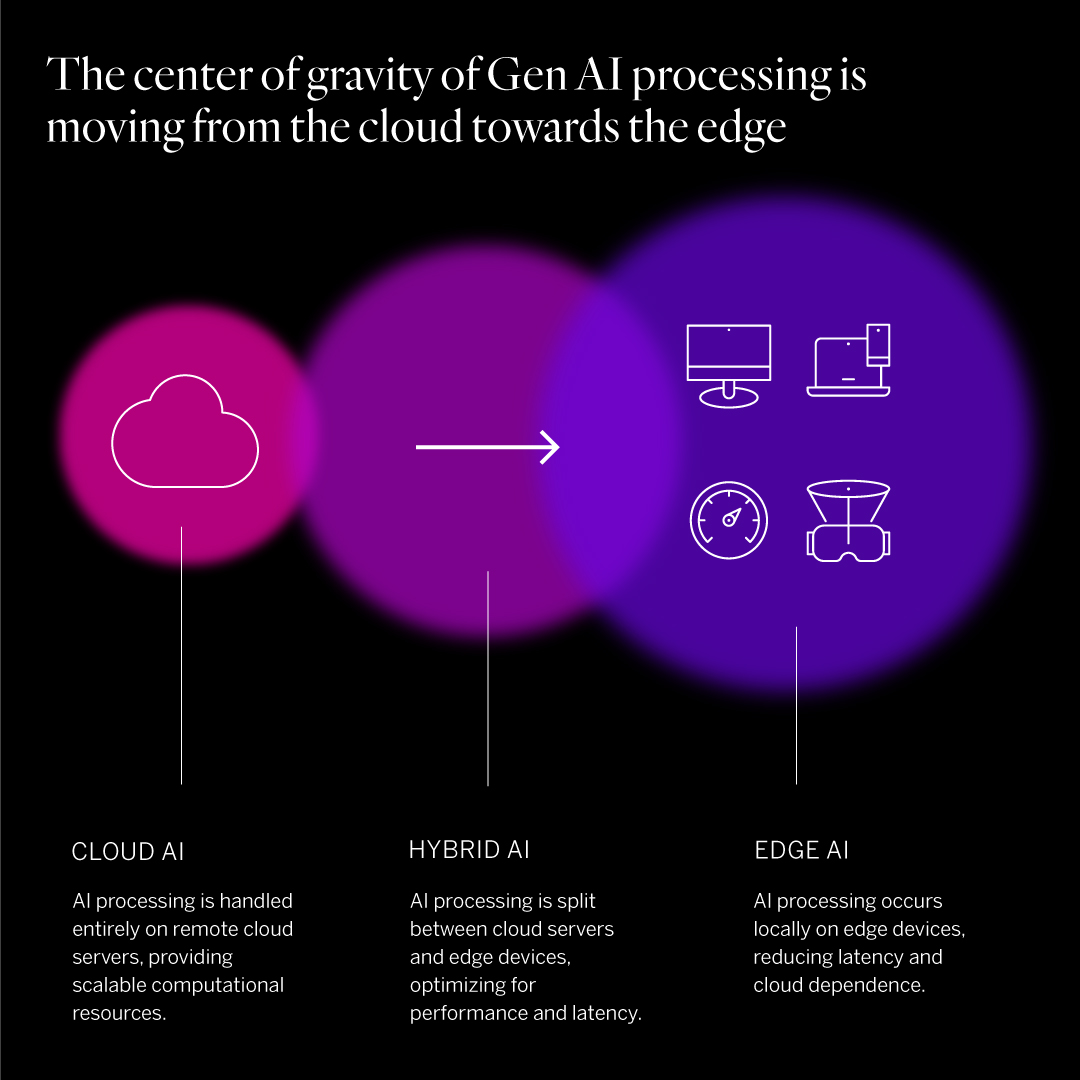

The edge is where the digital and physical worlds intersect—it is our phones, our laptops, and our connected devices, including sensors and robotic actuators that digitize and alter the physical world.

Costs favor smaller models on edge processors for many applications.

AI models are becoming increasingly sophisticated. Big tech players competing to lead the mass-market Gen AI space see the imperative to develop increasingly powerful AI with ever more training data to win this gold rush. In just a few years, the number of parameters that leading models (e.g., GPT, Gemini, Mistral and Llama) rely on has increased over 1,000 times, and their Massive Multitask Language Understanding (MMLU) benchmark scores, a measure of model knowledge similar to a high school equivalency exam, have increased threefold.

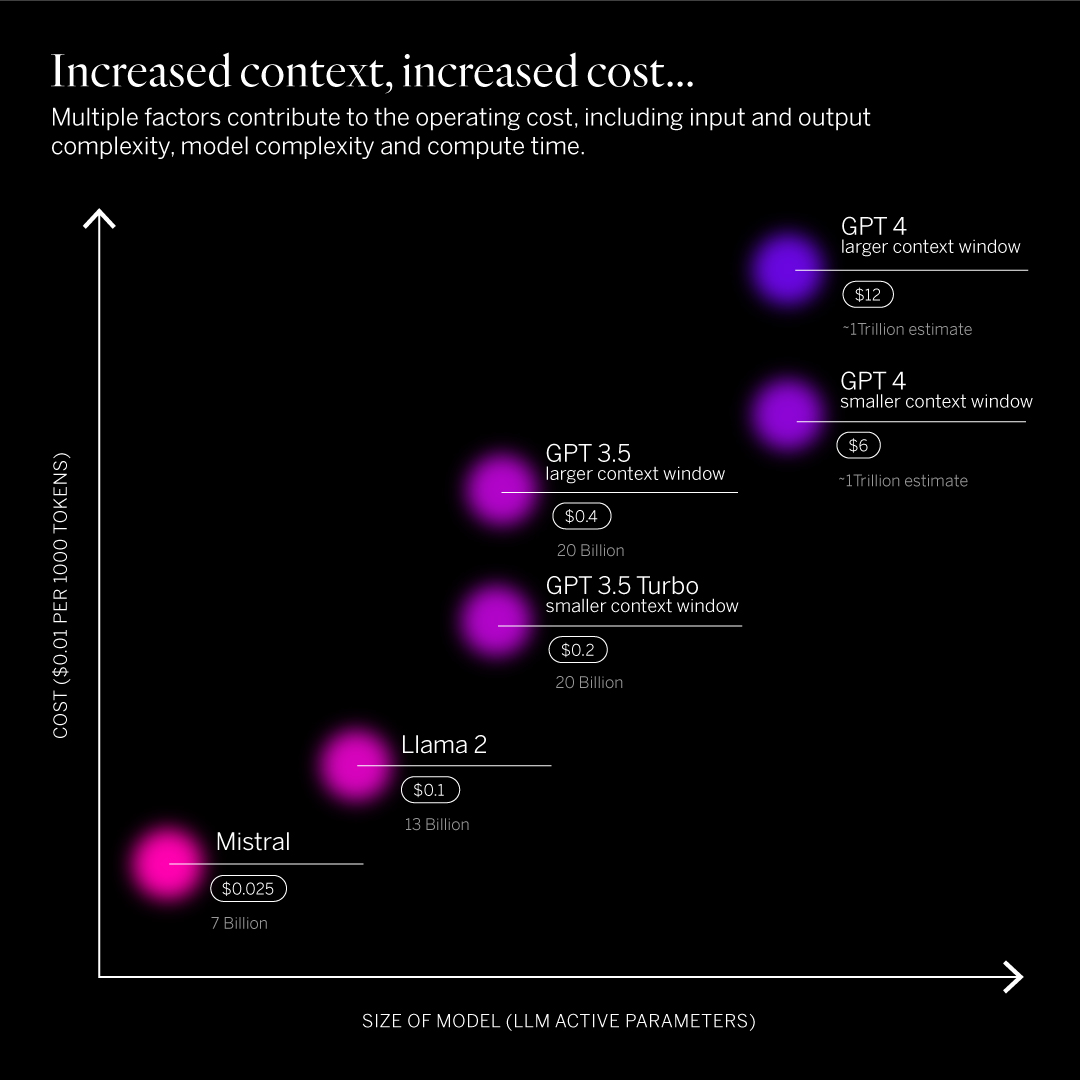

This makes sense. These models need to be able to handle everything from suggesting recipes based on ingredients to generating professional headshot images based on a photo. The desire to secure mass-market leadership has also been trumping cost optimization for these commercial applications, which is a trend that will continue. These increasingly powerful and sophisticated models can cost multiple cents per query to run, with the cost varying based on the size and nature of the prompt and output. This is driving a massive arms race for compute power as well, sending NVIDIA’s stock price into the stratosphere.

But for most businesses and applications, these top-end models are not necessary. As an application becomes more specific, both the universe of information and the sophistication of the model required to generate a useful response shrink. Model performance has also progressed so far that even “small” models, such as Gemini Nano, are reaching MMLU scores similar to those of the “large” language models of less than two years ago, such as GPT-3. To put it concretely, a helpful customer support chatbot might require only conversational language, documentation for the specific product and perhaps transcripts from user support calls, not poetry, music or images. This reduces the demands on the model significantly. Tools like Edge Impulse now enable developers to leverage large models like GPT-4o to train custom models that are many orders of magnitude smaller and that perform narrower functions on edge device processors with much lower latency.

At the same time, platform subscription fees for services like Copilot and cost-per-query dynamics for custom model applications provide strong incentives to right-size models and architect them for efficiency. When Gen AI runs on edge hardware, such as a user’s laptop, smartphone or connected device, each query is effectively free, but only once the significant challenge of getting the model to deliver satisfactory performance within limited local processing power and memory has been solved. With these factors combined, we will increasingly see business- and product-specific applications leveraging Gen AI with smaller language models, both at the edge and in the cloud.
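To make the trade-off concrete, here is a minimal sketch of a break-even calculation between paying per query in the cloud and paying a one-time engineering cost to run a model on the device. The function name and all dollar figures are hypothetical and chosen only for illustration; real costs depend on the model, the provider and the hardware.

```python
import math


def break_even_queries(edge_fixed_cost_usd: float,
                       cloud_cost_per_query_usd: float,
                       edge_cost_per_query_usd: float = 0.0) -> int:
    """Return the smallest number of queries at which the total edge cost
    is no higher than the total cloud cost.

    edge_fixed_cost_usd: one-time cost of getting the model to run well
        on the device (hypothetical figure supplied by the caller).
    cloud_cost_per_query_usd: what each cloud query costs.
    edge_cost_per_query_usd: marginal cost of an on-device query,
        assumed to be zero by default ("effectively free").
    """
    saving_per_query = cloud_cost_per_query_usd - edge_cost_per_query_usd
    if saving_per_query <= 0:
        raise ValueError("edge must be cheaper per query to ever break even")
    return math.ceil(edge_fixed_cost_usd / saving_per_query)


if __name__ == "__main__":
    # Hypothetical inputs: USD 250,000 of optimization work,
    # 2 cents per cloud query, zero marginal cost on the device.
    n = break_even_queries(edge_fixed_cost_usd=250_000,
                           cloud_cost_per_query_usd=0.02)
    print(f"Edge deployment pays for itself after about {n:,} queries")
```

Under these assumptions, the higher the query volume of an application, the sooner the up-front optimization effort is recovered.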

In the chart below, a token is a fragment of a word or a single character. The cost of a query is determined by the number of tokens processed, so larger prompts or outputs involve more tokens and cost more. LLM active parameters are the internal variables of a large language model that are used during processing. More parameters mean a larger, more powerful model, but also higher resource demands.
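As a rough illustration of how token counts translate into cost, the sketch below prices a single query from its prompt and output token counts. The function name and the per-1,000-token prices are hypothetical placeholders, not any provider's actual rates; it also assumes input and output tokens are priced separately, which is common but not universal.

```python
def query_cost_usd(prompt_tokens: int,
                   output_tokens: int,
                   usd_per_1k_input: float,
                   usd_per_1k_output: float) -> float:
    """Estimate the cost of one query from its token counts.

    Prices are expressed per 1,000 tokens and are supplied by the caller.
    """
    if prompt_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = prompt_tokens / 1000 * usd_per_1k_input
    output_cost = output_tokens / 1000 * usd_per_1k_output
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical prices: USD 0.005 per 1k input tokens,
    # USD 0.015 per 1k output tokens.
    short_query = query_cost_usd(200, 100, 0.005, 0.015)
    long_query = query_cost_usd(4000, 1000, 0.005, 0.015)
    print(f"short query: ${short_query:.4f}")
    print(f"long query:  ${long_query:.4f}")
```

The longer prompt and output cost more simply because more tokens are processed, which is the relationship described above.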

Gen AI is set to give rise to a new era of Internet of Things (IoT) with unique value at the edge.

As physical, analog creatures, we humans experience life, happiness and utility in the physical world. This is what makes the edge so powerful: it is our two-way window into the digital world (via everything from touchscreens to voice assistants to AR headsets) and the gateway through which action and utility enabled by data ultimately take place. We might buy a ticket and check in for our flight on our phone, but we want to fly in a real plane to a real destination.

Edge processing and edge AI are not new concepts. Edge processing enables technology to perform on a device when cloud connectivity and processing are unreliable, too slow, too expensive or too risky. Whether an autonomous car is avoiding an obstacle, a robot-assisted surgeon is making a delicate incision with force feedback, or a sensor is controlling a real-time industrial process at a remote plant, edge processing and AI already enable many valuable use cases.

Gen AI at the edge will unlock further frontiers of utility. Just as Gen AI is driving a paradigm shift from search engines providing a list of results to chatbots generating personalized content, Gen AI at the edge will be all about generating predictions, recommendations and content for users based on real-time context from the physical world plus knowledge from the digital one. This represents the step up in value that we have all been looking for from the IoT, which has too often failed to deliver compelling user value beyond quantification and connectivity—gadgetry masquerading as utility.

The possibilities are endless and exciting, with many already coming to life. They generally fall into two different categories: 1) products and services that deliver novel user interfaces and experiences and 2) products and services that take action.

User Interfaces and Experience

- Apple recently announced that it will integrate ChatGPT into iOS 18, iPadOS 18, and macOS Sequoia, touting privacy benefits for its users across content creation tasks.

- For a tourist, Gen AI at the edge can take an audio signal, remove background noise, and translate it into a native language in real time, enabling a fluent conversation without language barriers. The Samsung Galaxy S24 now offers this feature.

- For an industrial facility, Gen AI at the edge can enable text- and voice-based queries of real-time camera footage using machine vision to better monitor complex events, such as checking to ensure workers are wearing appropriate safety equipment. NVIDIA has already demonstrated this use case.

- For consumers, Gen AI at the edge can add significant utility via digital assistants and augmented reality devices like the Apple Vision Pro, Limitless Pendant, Rabbit R1, Humane AI Pin and the Brilliant Labs Frame glasses. These devices can generate recommendations and contextual information based on the user’s commands as well as their surroundings, all with low enough latency to avoid frustration. Qualcomm has demonstrated a large multi-modal model running on an Android phone.

- For retailers, Gen AI at the edge can be used to touch up and ready product images to post for ecommerce. Google recently demonstrated this capability built into Android for Shopify.

- For a physician, Gen AI at the edge can take multi-modal biometric sensor data from a patient along with voice input from the care team and medical training data to generate recommendations in real time, securely, without passing patient data to the cloud.

Action at the Edge

- For robotics, Gen AI at the edge can enable handling speech commands with ‘reasoning’ that goes well beyond repeatable task automation and enables robots to act as more seamless and useful assistants. The Figure humanoid robot recently demonstrated this ability, leveraging its partnership with OpenAI to receive indirect prompts like “can I have something to eat” and reason based on the available items in view. Embedding this Gen AI interface into the robot at the edge reduces latency and operating cost.

- For an autonomous vehicle, Gen AI at the edge can take sensor data (such as from cameras and LiDAR systems) as an input and generate predictions about the motion of other objects to improve navigation. Previously, a machine vision system would have been able to identify a pedestrian, but now, Gen AI can predict that the pedestrian is moving across the crosswalk. This processing has to be done in a split second onboard the car at the edge.

Differentiated training data from the edge will become increasingly valuable.

Model development is already a minority of the work in developing most AI applications; data collection and processing, as well as testing and optimization, account for most of it. As readily available models become more and more capable, including via open-source solutions (e.g., Meta’s Llama), we can expect the focus to increasingly turn away from model development and toward these other efforts.

For business-specific applications, data collection, tagging, cleaning and maintenance are the primary challenges. While many business-specific applications will be able to leverage data that already exists in purely digital realms (e.g., in-house knowledge management based on all documents within a company’s directories), accessing and digitizing data from the physical world via connected sensors and devices is a key opportunity for companies looking to differentiate themselves in this gold rush.

Privacy concerns and restrictions will also be a key driver. According to the Capgemini Research Institute, 75% of consumers consider trust to be a key factor in their purchase decisions around connected products. This forces companies to not only consider privacy and security, but also to bring enough value to the customer that they are willing to provide their data in exchange for it. It’s easy to disable cookies. Once someone has welcomed a connected product into their home or business environment because of the value it brings, it is much harder to walk away.

Final thoughts: Be multidisciplinary.

Given the cost pressure and compute scarcity for LLMs in the cloud, combined with the opportunities for high-value use cases enabled through Gen AI and data collection at the edge, we should expect this space to take off in 2024 and beyond.

As this trend continues, companies must consider how to surf the wave. Because this area is still emerging, defining a strong strategy requires a multidisciplinary approach and team: engineers who can assess the feasibility of architecting and running models on resource-constrained processors at the edge, experience and strategy teams with an understanding of the new art of the possible and the user value proposition, and business analysts who can calculate the trade-offs and benefits to ensure there is a Return on Investment (ROI).

As Executive Vice President and Global Head of Intelligent Products & Services for Intelligent Industry, Jeff brings together experts from across the global Capgemini Invent family, including frog, Synapse and Cambridge Consultants, to imagine, make and scale the next generation of connected products and services.

Jeff has been with Synapse product development for 14 years and has served as President for over 5 years, having previously worked as a software developer, entrepreneur, venture capital analyst, and strategy consultant. His technical background in mechanical and software engineering as well as his experience leading product development programs help him deeply understand the core of Synapse while leading the business.

Jeff holds a Master of Engineering Management (MEM) as well as BE and BA degrees in Mechanical Engineering from the Thayer School of Engineering and the Tuck School of Business at Dartmouth College. He’s an avid mountaineer, cyclist, runner, backcountry and Nordic skier and electric guitar player.

Kary focuses on emerging technology initiatives for Capgemini Invent, augmenting strategy and portfolio by creating technology solutions and services. Previously, Kary worked as a product lead at an AI startup in Paris and as an economic researcher for EU Horizon 2030.

His views on technology and economics can be found on TEDx, TheNextWeb, various podcasts, MIT Tech Review, WIRED, Harvard Business Review, World Economic Forum, Les Echos, L’Opinion, The Conversation, Let’s Talk Payments, Huffington Post, and La Presse+. His book “The Blockchain Alternative” (2017) is available at Springer-Apress & O’Reilly’s Safari.

Kary has been an Associate Research Scientist with Cambridge, a Senior Fellow at Ecole des Ponts and a visiting lecturer at a few EU business schools. In a previous life, he served in the French Foreign Legion and was awarded the cross of valor for combat operations in Afghanistan.

Hugo Cascarigny has been passionate about AI, data and analytics since he joined Capgemini Invent 12 years ago. As a long-time member of the industries and operations teams, he is dedicated to transforming AI into practical efficiency levers within Engineering, Supply Chain, and Manufacturing. In his role as Global Data & AI Leader, he spearheads the development of AI and generative AI offerings across Invent.
