Building Digital Superpowers with Blended Intelligence

Human actors working inside complex enterprise digital systems require unique forms of situational awareness and contextual interaction across the spectrum of data, information and insights to take on the appropriate amount of cognitive load for decision and action. Machine learning (ML) cannot yet fully replace human intuition and experience in many of these environments, but various methods of artificial intelligence offer an exceptional set of tools for delivering actionable insights and generating business value in a more efficient and automated manner.

It takes a multidisciplinary process and a team of designers, technologists and data scientists to create software platforms and user experiences that effectively blend the best of human reasoning and machine intelligence into one system built on transparency, scalability and trust. frog has helped clients in sectors as diverse as energy, industrial, financial services and healthcare develop AI-enabled—yet still human-centered—systems and solutions to achieve this balance. The current challenges and future opportunities for companies seeking to weave practical AI into their digital transformation strategies depend on thoughtfully putting AI in the hands of humans in order to enable “last mile” decision-making and action-taking based on levels of calculated certainty. Such complex human-machine interactions demand a design thinking approach to complement the building up of machine learning capabilities.

The Tale of the Tape

A healthy amount of alarm and concern has been raised about integrating AI into various industries and delegating work to it, including concern about its impact on human careers and livelihoods. Unfortunately, amid hyped-up predictions of potential job losses, AI and machine learning often get confused or conflated with scripted robotic automation. The on-the-ground reality of how human and machine intelligences compare, contrast and ultimately complement each other is not as threatening as it seems. A closer look instead exposes opportunities for the design of more powerful and elegant software user experiences.

Taking a somewhat tongue-in-cheek look at current measurements of the raw processing capabilities and performance of the human brain compared with recent supercomputers, we certainly see stark differences in both physical footprint (roughly one-third the size of a basketball versus a full-size basketball court) and power consumption (an energy-efficient 20 watts versus a power-hungry 10,000 kilowatts). Yet when loosely measured in operations per second, humans still hold roughly a fourfold advantage over supercomputers (nearly 1 exaflop versus 0.25 exaflops), while using only a small fraction of the resources and remaining fully mobile.
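Taking the loose figures quoted above at face value (about 1 exaflop at 20 watts for the brain, 0.25 exaflops at 10,000 kilowatts for the supercomputer), a quick back-of-the-envelope calculation shows where the real gap lies. These are the rough estimates from the paragraph, not measurements, and the sketch only does the arithmetic on them.

```python
# Back-of-the-envelope comparison using the loose figures quoted above.
EXA = 1e18

brain_ops_per_s = 1.0 * EXA        # "nearly 1 exaflop" (rough estimate)
brain_watts = 20.0                 # ~20 W

super_ops_per_s = 0.25 * EXA       # 0.25 exaflops
super_watts = 10_000 * 1_000.0     # 10,000 kW = 10 MW

# Raw throughput ratio: how many times more operations per second.
throughput_ratio = brain_ops_per_s / super_ops_per_s
# Efficiency ratio: operations per second per watt, brain vs. supercomputer.
efficiency_ratio = (brain_ops_per_s / brain_watts) / (super_ops_per_s / super_watts)

print(f"Throughput advantage: {throughput_ratio:.0f}x")            # 4x
print(f"Operations-per-watt advantage: {efficiency_ratio:,.0f}x")  # 2,000,000x
```

On these numbers the throughput gap is a modest factor of four; the roughly two-million-fold gap in operations per watt is the far more striking contrast.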

While that may be a quantitative view of the current landscape, the practical reality of both human and artificial intelligence requires a more qualitative assessment. Humans can work across disciplines, bringing experience from multiple and sometimes disparate domains of knowledge to solve problems, while machine intelligence is mostly limited to single or closely related domains. Humans have plasticity and high variability in thought and action, while machines are much more rigid and excel primarily in delivering high precision. At the conscious level, humans are largely serial processors, while machines can be directed to run workstreams massively in parallel (for example, try to count the number of letters in your full name while simultaneously adding the first five odd numbers together in your head: impossible!). In positive and negative ways, humans are often influenced by the context of their current environment, even when relying on extreme skill, expertise and focus. Conversely, automated systems and machine intelligence can only be influenced by the factors humans plug into them or allow them to observe. The contextual biases that humans bring can certainly be a hindrance, but they can also be channeled into positive attributes when effectively paired with more consistent machine intelligence through high-quality software UX. With the right UI design and technology integration, humans and machines can fill each other’s skill gaps and check each other’s blind spots.
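To make the parallelism point concrete, here is a minimal sketch that submits the two mental tasks from the example side by side using Python’s standard `concurrent.futures`. The name is a hypothetical placeholder. In CPython, threads interleave CPU-bound work rather than running it truly in parallel, and for tasks this small they add overhead rather than speed; a `ProcessPoolExecutor` would be the usual choice for genuine CPU parallelism. The point is only that a machine can be directed to run independent workstreams at once.

```python
from concurrent.futures import ThreadPoolExecutor

def count_letters(full_name: str) -> int:
    # Count alphabetic characters only, ignoring spaces and punctuation.
    return sum(1 for ch in full_name if ch.isalpha())

def sum_first_odd_numbers(n: int) -> int:
    # 1 + 3 + 5 + ... for the first n odd numbers.
    return sum(2 * k + 1 for k in range(n))

# Hypothetical name used purely for illustration.
name = "Ada Lovelace"

with ThreadPoolExecutor(max_workers=2) as pool:
    # Both tasks are submitted before either result is awaited.
    letters = pool.submit(count_letters, name)
    odd_sum = pool.submit(sum_first_odd_numbers, 5)
    print(letters.result(), odd_sum.result())  # 11 25
```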

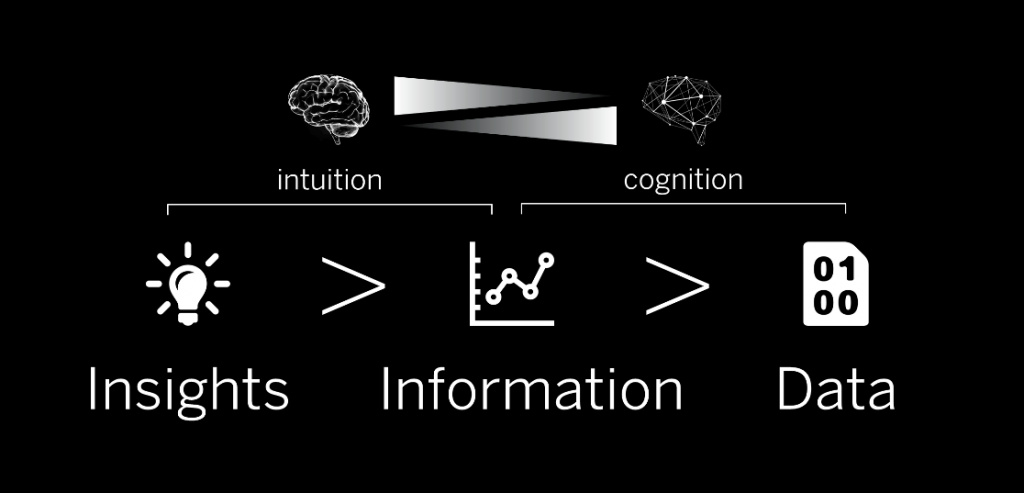

Insights > Information > Data

While AI is not yet a substitute for human intuition and experience, the growing complexity and scale of the data and information that must be managed have shown that human-only manipulation and interpretation has reached its limits. To move beyond those limits, however, companies need to consider the human talent transformation that must occur in parallel with any digital transformation initiative that has an AI or machine intelligence component. To support this transformation of human talent, software platforms must be designed and built to be extensible and adaptive as the capabilities of ML and AI increase.

Contrary to popular science fiction, the goal of most AI is not to replicate human-like behaviors and capabilities in order to replace the need for human intervention. While many AI systems do mimic human behaviors, the goal is to facilitate more seamless and natural interactions between AI and its human users. In fact, human intervention is necessary when machine processing stalls or fails, as demonstrated during the Apollo 11 moon landing in 1969. In the final minutes of the lunar module’s descent to the lunar surface, its guidance computer became overloaded, raised a series of 1202 and 1201 program alarms and repeatedly restarted, shedding lower-priority tasks to keep the landing on track. Moments later, with the automated targeting heading toward a boulder-strewn crater, Neil Armstrong took semi-manual control and landed the module himself. Fifty years later, similar lessons hold true. Tesla and others in the automotive domain have achieved SAE Level 2 (partial) driving automation with an impressive array of computer vision and real-time data processing, yet drivers still need to stay hyper-aware, with hands on the wheel and eyes on the road.

Human intervention and interpretation, working alongside machine-driven automation and intelligence, operate across a spectrum of data, information and insights. Whether it’s raw and unstructured or processed and cleaned for human and/or machine readability, data in and of itself has no direct value. The adage “there is value in your data” is therefore misleading. At scale, data’s value only begins to appear when machine intelligence processes it for aggregation, organization and inference of information. Insights then come from evaluating that information in the larger context of human experience, from which conclusions can be drawn and action directed.
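A minimal sketch of the first step along this spectrum, under assumed inputs: hypothetical raw sensor readings (asset IDs and temperature values) are aggregated by machine into per-asset summaries, i.e. information. Drawing a conclusion and directing action from those summaries is deliberately left to the human.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical raw data: (asset_id, temperature_reading) pairs.
raw_readings = [
    ("pump-1", 71.2), ("pump-2", 68.9), ("pump-1", 73.8),
    ("pump-3", 90.4), ("pump-2", 69.5), ("pump-3", 92.1),
]

def summarize(readings):
    """Aggregate raw readings into per-asset information (count, mean, max)."""
    by_asset = defaultdict(list)
    for asset_id, value in readings:
        by_asset[asset_id].append(value)
    return {
        asset_id: {"count": len(vals), "mean": round(mean(vals), 2), "max": max(vals)}
        for asset_id, vals in sorted(by_asset.items())
    }

for asset_id, stats in summarize(raw_readings).items():
    print(asset_id, stats)

# Whether pump-3's higher temperatures signal a fault, a heavier duty cycle or a
# miscalibrated sensor is an insight that still needs human context.
```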

Across this spectrum, humans and machines have complementary strengths (and weaknesses). In terms of conscious processing, humans can generally rely on experience and inference to form meaningful insights, make decisions and take actions using a relatively small set of information and data points. Conversely, machine intelligence can process massive amounts of structured and unstructured data into valuable bits of information, but is currently limited in its ability to independently craft actionable insights without human verification. Given the current scale of raw data generation, machines can “see” beyond humans in their capacity for rapid and massive pattern recognition, which bridges the gap between data and information; humans, meanwhile, can “see” beyond machines in their ability to intuitively connect disparate domains and contexts, bridging the gap between information and insights. In order to maximize the capabilities of both working together, there must be transparent and trusted communication between them, expressed through the software user experience.

Designing for Blended Intelligence

Some enterprise software organizations are undertaking meaningful initiatives to infuse human-centered design into their digital transformations, but these efforts often lack collaboration with parallel initiatives in their data science and engineering teams. Design that blends the intelligence of humans and machines requires an approach that is multidisciplinary and distinct from the development of AI on its own, which is generally focused on mimicking human behavior through deep learning techniques running behind the scenes inside software automation. Blended intelligence as a design approach brings AI to the forefront of the experience, creating new patterns for user interaction within software systems that address human performance KPIs such as shorter learning curves, better decision-making, less time on task, fewer critical errors, broader management scope and greater workload scalability, realized as a force multiplier.

When designing a platform for blended intelligence, it is vital to identify and model the modes of human behavior and interaction within the system of machine intelligence, since the granularity of the data and the fidelity with which information is delivered are highly dependent on the context and needs of the user. Engineers can no longer take a “one-size-fits-all” approach to software design, even beyond the UI layer. For example, a user in a planning or analysis role often needs a higher level of abstraction and lower data and information density than a user monitoring or executing a discrete task or workflow, where the details matter. The technological and design challenge becomes a balancing act: giving the user either too much or too little data and information for their current context and role can degrade the experience.
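One way to encode this balancing act, sketched here under assumptions: each user mode maps to a view profile that sets the level of abstraction and caps how many items are shown. The mode names, fields and limits are hypothetical, chosen to mirror the planning-versus-execution example, and the items are assumed to arrive already ranked by relevance.

```python
from dataclasses import dataclass
from enum import Enum

class UserMode(Enum):
    PLANNING = "planning"
    ANALYSIS = "analysis"
    MONITORING = "monitoring"
    EXECUTION = "execution"

@dataclass(frozen=True)
class ViewProfile:
    abstraction: str  # "aggregate" summaries vs. "detail" records
    max_items: int    # cap on items rendered, to limit information density

# Hypothetical profiles: planning/analysis modes see fewer, more abstract items;
# task-focused modes see the details that matter to the task at hand.
PROFILES = {
    UserMode.PLANNING: ViewProfile("aggregate", 5),
    UserMode.ANALYSIS: ViewProfile("aggregate", 10),
    UserMode.MONITORING: ViewProfile("detail", 25),
    UserMode.EXECUTION: ViewProfile("detail", 50),
}

def select_view(mode: UserMode, items: list) -> tuple[str, list]:
    """Return the abstraction level and the (pre-ranked) items to render for a mode."""
    profile = PROFILES[mode]
    return profile.abstraction, items[: profile.max_items]

items = [f"event-{i}" for i in range(100)]
level, shown = select_view(UserMode.PLANNING, items)
print(level, len(shown))  # aggregate 5
```

Keeping the profiles as data rather than branching logic makes it easy to tune density per role as user research comes in.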

Designing and enabling AI systems that complement and empower human users requires a form of “digital empathy” to be encoded into their software. The human user must feel confident to delegate the processing and interpretation of data and information to the AI when the certainty of an outcome is high, and the AI must gracefully revert and defer to the “human in the loop” when the certainty is low or unknown. It is within these contextual handoffs of authority between humans and AI that the user experience can fall down if not considered and designed for as a whole.
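The handoff described above can be sketched as a simple routing rule, assuming the model exposes a calibrated confidence score between 0 and 1 (or none at all). The threshold, action strings and `Decision` structure are hypothetical; in practice the threshold would be tuned per task and per level of risk.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Decision:
    action: str
    handled_by: str  # "ai" or "human"
    reason: str

# Hypothetical threshold for delegating to the AI.
DELEGATION_THRESHOLD = 0.9

def route(recommended_action: str, confidence: Optional[float]) -> Decision:
    """Delegate to the AI only when its confidence is known and high;
    otherwise defer to the human in the loop, with the recommendation attached."""
    if confidence is None:
        return Decision(recommended_action, "human", "confidence unknown")
    if confidence >= DELEGATION_THRESHOLD:
        return Decision(recommended_action, "ai",
                        f"confidence {confidence:.2f} >= {DELEGATION_THRESHOLD}")
    return Decision(recommended_action, "human",
                    f"confidence {confidence:.2f} below threshold")

print(route("reorder spare part", 0.97).handled_by)  # ai
print(route("shut down line 3", 0.62).handled_by)    # human
print(route("reroute shipment", None).handled_by)    # human
```

Carrying the `reason` along with each decision gives the UI something to show at the moment of handoff, which is where the experience most often breaks down.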

Building Digital Superpowers

In designing and building a platform for blended intelligence, it is helpful to think of the creation of new digital capabilities in terms of giving humans “superpowers”–things AI can empower them to do beyond their natural talents while remaining in control of the overall outcomes.

X-Ray Vision

A first and foundational superpower is that of “X-ray vision,” or the ability to see through walls of data to identify meaningful patterns of both constraint and opportunity. In practical product design terms, it is about improving the signal-to-noise ratio with better data quality, entity model optimization and relevant analytics. The days of visually scanning rows of a spreadsheet to find the needle in the haystack are over. For today’s enterprise software experiences, the goal is to reduce the complexity and density of information displayed while allowing AI to be both a microscope and a telescope for the user. From a product experience perspective, the focus is no longer on how much the software can do in one screen (in other words, no “kitchen sink” views) but rather on how well choreographed the interaction between human and machine intelligence is within the system. An important design principle here is to ensure that data entities within the same UI view are correlated and connected, since representing multiple primary information domains on the same screen can lead to cognitive overload for the user.
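A minimal sketch of one such lens, assuming a simple modified z-score rule rather than any particular production technique: instead of asking a person to scan every row, the machine surfaces only the values that sit far from the norm. It uses the median and the median absolute deviation (MAD), which the outliers themselves distort far less than a mean and standard deviation; the 0.6745 constant and 3.5 cutoff are a commonly used rule of thumb. The transaction data is hypothetical.

```python
from statistics import median

def flag_outliers(rows, threshold=3.5):
    """Return (key, value) rows whose modified z-score exceeds the threshold."""
    values = [v for _, v in rows]
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # No spread to measure against; this simple rule flags nothing.
        return []
    return [(key, v) for key, v in rows if 0.6745 * abs(v - med) / mad > threshold]

# Hypothetical transaction amounts; one stands out.
rows = [
    ("txn-001", 102.0), ("txn-002", 98.5), ("txn-003", 101.2), ("txn-004", 99.8),
    ("txn-005", 100.4), ("txn-006", 250.0), ("txn-007", 97.9), ("txn-008", 100.9),
]
print(flag_outliers(rows))  # [('txn-006', 250.0)]
```

On this data a plain mean/standard-deviation z-score gives the 250.0 row a score of only about 2.7, because the outlier inflates the very spread it is measured against; the median-based version scores it above 90.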

Bionics

Another somewhat higher-order superpower is that of “bionics,” or the ability to lift or carry more than a human can manage alone. This typically involves creating scalable workflows through progressive automation systems in which machine intelligence is directed to observe a common repetitive process performed by a user and proactively generate recommendations for delegation of the workload. Whether it involves running millions of scenario simulations or brute force data input and organizational tasks, the workflow optimizations that machine intelligence can produce lead to the creation of more valuable and higher-order human user roles that combine multiple domains of knowledge and expertise. This means users can spend more time strategically developing insights and less time tactically manipulating data.
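Progressive automation can start from something as simple as noticing repetition. The sketch below is a deliberately simple frequency count over a hypothetical interaction log, not a description of how any particular product does it: it looks for fixed-length action sequences that recur often enough to be worth offering to automate, leaving the user to approve the delegation.

```python
from collections import Counter

def recommend_automation(actions, length=3, min_count=3):
    """Find action sequences of a given length that recur at least min_count times.

    Returns (sequence, count) pairs, most frequent first, as candidates the
    system could offer to take over, pending the user's approval.
    """
    windows = (tuple(actions[i:i + length]) for i in range(len(actions) - length + 1))
    counts = Counter(windows)
    return [(seq, n) for seq, n in counts.most_common() if n >= min_count]

# Hypothetical interaction log captured from one user's session.
log = [
    "open_report", "filter_region", "export_csv",
    "open_report", "filter_region", "export_csv",
    "check_email",
    "open_report", "filter_region", "export_csv",
]
for seq, n in recommend_automation(log):
    print(f"Seen {n}x: {' -> '.join(seq)}; offer to automate?")
```

Only the report-export routine recurs three times here, so it is the single candidate surfaced; the one-off email check and the overlapping windows around it fall below the threshold.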

Mind Reading

Finally, and most importantly, the superpower of “mind reading” is the result of enabling situational awareness, transparency, believability and trust between human and machine intelligence, especially in complex and mission-critical workflows. From the AI or machine intelligence perspective, the ability to effectively monitor and evaluate the current user context and intent enables both the anticipation and recommendation of actions based on prior successes and failures. From the human intelligence perspective, the ability to directly inspect the data, logic and algorithms AI has used to generate specific insights on the user’s behalf builds the confidence required for the user to take full advantage of their superpowers in the service of their business mission. For subject matter experts with years of experience and training, welcoming AI into their software tools and workflows often requires such transparent “show your math” moments in the user experience to gain trust and accelerate adoption. AI delivered to users as a black box will ultimately fail in most industries. It takes the type of human-centered and multidisciplinary design offered by frog to blend the best attributes of both into innovative software experiences.
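A minimal illustration of a “show your math” moment, under stated assumptions: a linear score is about the simplest model whose reasoning can be laid out term by term, so each feature’s contribution (weight times value) can be shown next to the result. The weights, bias and feature names are hypothetical; more complex models need dedicated explanation techniques such as feature-attribution methods, which this sketch does not attempt.

```python
# Hypothetical weights and bias for a linear equipment-risk score;
# a real system would learn these from data.
WEIGHTS = {"vibration_mm_s": 0.8, "temperature_c": 0.05, "hours_since_service": 0.002}
BIAS = -6.0

def score_with_explanation(features):
    """Return a linear score plus each feature's contribution (weight * value),
    so the user can see exactly how the score was assembled."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions

score, parts = score_with_explanation(
    {"vibration_mm_s": 7.5, "temperature_c": 82.0, "hours_since_service": 1200.0}
)

# Print the breakdown, largest contribution first, then the total.
print(f"{BIAS:+.2f}  bias")
for name, c in sorted(parts.items(), key=lambda kv: -abs(kv[1])):
    print(f"{c:+.2f}  {name}")
print(f"= {score:.2f}  risk score")
```

Because the contributions sum exactly to the score (with the bias), an expert can check the arithmetic and challenge any single term, which is the kind of transparency the paragraph argues builds trust.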

In the coming years, artificial intelligence and machine learning will become increasingly accessible, flexible and powerful, fundamentally transforming the way many businesses and industries operate. The biggest winners to emerge from this transformation, however, will not be those that adopt as much AI as quickly as possible. Rather, the winners will be those who can create robust and seamless blended intelligence systems that leverage the combined and complementary powers of both human and machine intelligence. That means a multidisciplinary, human-centered approach is the only way forward for any firm seeking to empower its employees and deliver better experiences through AI.

As Executive Technology Director at frog, Robert leads the experimental design and product delivery practice, which specializes in accelerating the creative process with collaborative experimentation, ideation, prototyping and validation of emerging technologies. With over 25 years of experience as a developer, architect, hacker, inventor, problem solver and team leader, he has built a portfolio that reflects both the breadth and depth of his technical expertise and his passion for delivering innovative user experiences. He has led technology strategy, venture building, R&D, prototyping, architecture and software development programs targeting web, mobile, desktop, embedded device and Zero UI platforms for clients such as Microsoft, Intel, HP, AT&T, FOX Sports, Halliburton and DARPA.
